Research Projects

This project aims to develop a tool that supports students in acquiring mathematical competence in linear algebra. To this end, we aim to give the student suitable feedback while he works on an exercise.

The tool takes the actual application of the relevant rules out of the student's hands. This way he can no longer make calculation errors, and he saves a lot of time on tedious calculations. More importantly, we hope that he then realizes that his task is to decide which rules to apply, instead of just applying one that seems to fit the current formula: what matters is mathematical competence, not just an understanding of the procedures.

Furthermore, because the student indicates which steps he takes, the system can follow his path towards a solution. This enables it to give feedback on that path and hints that increase his mathematical competence. Figures 1 and 2 show an example problem, the first step towards a solution, a selection made in the second step, and the rules applicable to that selection.

| Figure 1. Working window, where linear algebra formulas are manipulated. | Figure 2. Rule window, to select rules that are applicable to the selection made in the working window. |
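This page does not describe how the tool represents formulas or rules internally. The following Python sketch only illustrates the general idea. It offers the rules that apply to a selected subexpression, lets the tool (not the student) perform the rewrite, and logs each chosen rule so that the student's path could later be analysed. The expression classes, the three transpose rules, and the names `applicable_rules` and `apply_rule` are all hypothetical and are not the project's implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Union

# Hypothetical expression tree for matrix formulas (not the project's data model).
@dataclass(frozen=True)
class Sym:
    name: str

@dataclass(frozen=True)
class Op:
    kind: str    # "+" (sum), "*" (product) or "T" (transpose)
    args: tuple  # operand expressions

Expr = Union[Sym, Op]

@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[Expr], bool]  # is the rule applicable to the selection?
    rewrite: Callable[[Expr], Expr]  # rewritten form of the selection

def is_op(e: Expr, kind: str) -> bool:
    return isinstance(e, Op) and e.kind == kind

# A few illustrative linear algebra rules (hypothetical rule base).
RULES: List[Rule] = [
    Rule("transpose of product: (AB)^T = B^T A^T",
         lambda e: is_op(e, "T") and is_op(e.args[0], "*"),
         lambda e: Op("*", tuple(Op("T", (a,)) for a in reversed(e.args[0].args)))),
    Rule("transpose of sum: (A+B)^T = A^T + B^T",
         lambda e: is_op(e, "T") and is_op(e.args[0], "+"),
         lambda e: Op("+", tuple(Op("T", (a,)) for a in e.args[0].args))),
    Rule("double transpose: (A^T)^T = A",
         lambda e: is_op(e, "T") and is_op(e.args[0], "T"),
         lambda e: e.args[0].args[0]),
]

def applicable_rules(selection: Expr) -> List[Rule]:
    """Rules to show in the rule window for the current selection."""
    return [r for r in RULES if r.applies(selection)]

def replace(expr: Expr, target: Expr, new: Expr) -> Expr:
    """Replace every occurrence of `target` in `expr` by `new`."""
    if expr == target:
        return new
    if isinstance(expr, Op):
        return Op(expr.kind, tuple(replace(a, target, new) for a in expr.args))
    return expr

def apply_rule(formula: Expr, selection: Expr, rule: Rule, log: List[str]) -> Expr:
    """Perform the rewrite for the student and log the chosen rule,
    so the student's path can be analysed for feedback."""
    if not rule.applies(selection):
        raise ValueError(f"rule not applicable: {rule.name}")
    log.append(rule.name)
    return replace(formula, selection, rule.rewrite(selection))
```

For example, selecting `(AB)^T` in `(AB)^T + A` offers only the product rule, and applying it yields `B^T A^T + A`. For simplicity, `replace` rewrites every identical occurrence of the selection. A real tool would track the selection's position in the formula instead.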

The system also supports more theoretical problems (Figure 3).

The current formula layout does not yet seem optimal. In later stages of the project, these interface issues will be explored in more detail.

Furthermore, we are now in a position to start on the heart of the work: the feedback.

| Figure 3. First few steps of a more abstract problem. |

Running since the second half of 2005 at 0.3 FTE per month.

This project is funded by Delft University of Technology.

Today's massive ad-hoc agent worlds leave users lost among the services. In this project we developed a way to help human users find the service they need, building on existing methods for robust natural language processing and on agent technology. A key part of our approach is a service matcher agent that helps the user with this task. We also proposed an 'architecture' suited for ad-hoc networks.

Our architecture mainly consists of a set of detailed negotiation standards (ontologies and FIPA-style protocols). It brings context information into the ad-hoc agent world (see figure below) and thereby enables context-dependent searches in a broad sense: the user's history, location and gaze direction, task relations, and preferred tasks and services are all considered in the search for a matching service.
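This page does not say how these context factors are combined. As a hedged illustration, the Python sketch below ranks candidate services by a weighted sum. One term is plain word overlap with the request, a crude stand-in for natural language understanding. The others reward a matching location ("In"), the user's gaze, a task relation ("StepOf"), past use and stated preferences. The classes, field names and the `WEIGHTS` values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

@dataclass
class Service:
    name: str
    keywords: Set[str]  # words the service's agent is assumed to understand
    location: str       # target of an "In" relation
    tasks: Set[str]     # tasks the service supports

@dataclass
class UserContext:
    location: str
    current_task: str
    gaze_target: Optional[str] = None                 # service the user looks at
    history: List[str] = field(default_factory=list)  # services used before
    preferred: Set[str] = field(default_factory=set)
    step_of: Dict[str, Set[str]] = field(default_factory=dict)  # task -> parent tasks ("StepOf")

# Hypothetical weights; not taken from the project.
WEIGHTS = {"word": 1.0, "location": 2.0, "gaze": 3.0, "task": 1.5,
           "history": 0.5, "preferred": 1.0}

def score(request: str, s: Service, ctx: UserContext) -> float:
    overlap = len(set(request.lower().split()) & s.keywords)
    if overlap == 0:
        return 0.0  # the service does not seem to understand the request
    related_tasks = {ctx.current_task} | ctx.step_of.get(ctx.current_task, set())
    total = WEIGHTS["word"] * overlap
    total += WEIGHTS["location"] * (s.location == ctx.location)
    total += WEIGHTS["gaze"] * (s.name == ctx.gaze_target)
    total += WEIGHTS["task"] * bool(s.tasks & related_tasks)
    total += WEIGHTS["history"] * ctx.history.count(s.name)
    total += WEIGHTS["preferred"] * (s.name in ctx.preferred)
    return total

def rank(request: str, services: List[Service], ctx: UserContext) -> List[str]:
    """Names of services that match the request, best context match first."""
    scored = [(score(request, s, ctx), s.name) for s in services]
    return [name for sc, name in sorted(scored, key=lambda p: -p[0]) if sc > 0]
```

With this scoring, the same request can produce a different ranking depending on where the user is and what he is looking at. This is the kind of context dependence described above.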

The figure below shows the interface to the service matcher. Here the user can type his request in natural language. Other natural language input methods, such as handwriting, could of course be used instead.

The service matcher agent then tries, taking the current context into account, to find an agent that understands and can handle the request. Because both the network and the agents are ad hoc, our architecture uses separate, distributed, lightweight natural language parsers, one for each agent.

| Small part of an ad-hoc agent world. Purple blocks are agents of various types. Arrows indicate location relations ("In") and task relations ("StepOf"). | Interface to the service matcher agent. |

Finished. Ran between 2001 and 2005. Two papers on performance tests with human users have been accepted and are awaiting publication.

The project was mainly funded by the National Freeband Knowledge Impulse.

Delft University of Technology and TNO made smaller contributions.

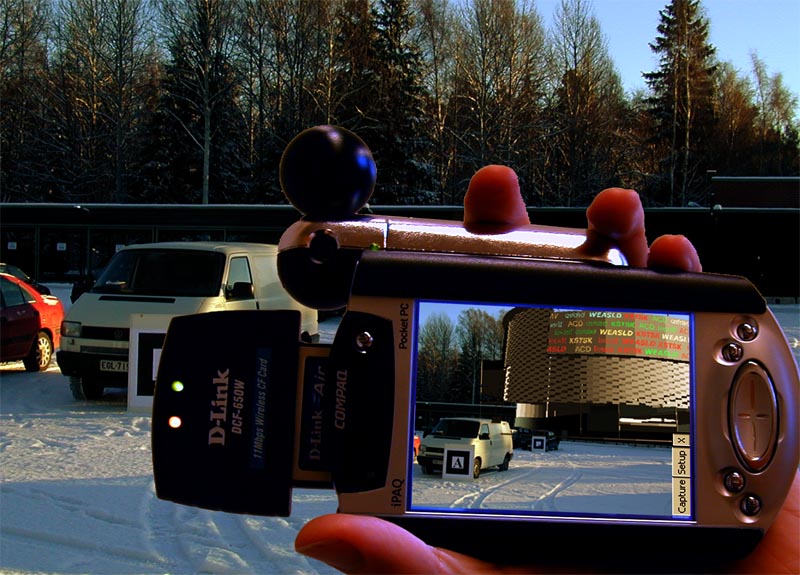

The NISHE project was a short project to realize augmented reality with large virtual models on a PDA. We focused on an architectural scenario, in which architects can show their designs to the customer on site.

| PDA showing a parking lot augmented with a new building. | Simplified architecture of the NISHE system. Transparent overlay images are calculated at a remote server. |

We have a video nicely illustrating the system at work, projecting a new building over the nearby parking lot. Click on the image to start the video.

Finished. The project ran between November 2002 and March 2003.

The project was financed by the Multimedia Group at VTT Finland.

In the Ubiquitous Communications project we did research on augmented reality (AR) for wearable systems. We developed a latency-layered rendering architecture to enable AR on a wearable platform with highly limited resources such as CPU and memory.

The inner layer had a latency of only 8 milliseconds, including both rendering and display. This was achieved by rendering just ahead of the raster beam.


Higher layers simplified the scene to fit the resources of the lower layers. We analysed the available simplification methods that could reduce the required resources, and built a mathematical model to estimate the resource requirements at the lower layers.
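The mathematical model itself is not given here. The Python sketch below only shows the general shape such an estimate could take for the bandwidth case. Each simplification technique is described by a payload size and an update rate, and the highest-quality technique that fits the link budget is chosen. The payload formulas (4 bytes per imposter pixel, 12 bytes per vertex), the quality scores and all names are assumptions for illustration, not the project's model.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass(frozen=True)
class Technique:
    name: str
    payload_bytes: float  # bytes sent to the lower layer per update (assumed)
    updates_per_s: float  # how often the lower layer needs a fresh version (assumed)
    quality: float        # higher is better; hypothetical ranking

    def bandwidth(self) -> float:
        """Estimated bandwidth requirement in bytes per second."""
        return self.payload_bytes * self.updates_per_s

def imposter_payload(width_px: int, height_px: int, bytes_per_px: int = 4) -> int:
    """Size of one imposter image, assuming e.g. 32-bit RGBA pixels."""
    return width_px * height_px * bytes_per_px

def mesh_payload(vertices: int, bytes_per_vertex: int = 12) -> int:
    """Size of a simplified mesh, assuming three 32-bit floats per vertex."""
    return vertices * bytes_per_vertex

def choose(techniques: Sequence[Technique],
           budget_bytes_per_s: float) -> Optional[Technique]:
    """Pick the highest-quality technique whose bandwidth fits the budget."""
    feasible = [t for t in techniques if t.bandwidth() <= budget_bytes_per_s]
    return max(feasible, key=lambda t: t.quality, default=None)
```

The same pattern would extend to the other resources mentioned above, such as CPU time and memory, by giving each technique one estimate per resource and requiring all of them to fit.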

| Simplification methods. Clockwise, starting at top left: original complex 3D object, 3D simplified object (red), meshed imposter (cyan), layered depth image (purple) and simple imposter (green). | Mathematical model estimating required resources (here bandwidth requirements). Colors represent different simplification techniques (left figure). |

| We built a "portable" demonstrator that could render a statue on the campus, using these techniques. | Video explaining our approach. Click on image to play. The full video is available at the ubicom website. |

Finished. Ran between 1997 and 2001.

The project was financed by Delft University of Technology, as one of the DIOC interdisciplinary 'spearhead' projects.