Abstract

Nowadays, an ever increasing number of sensors surround us that collect information about our behavior and activities. Devices that embed these sensors include smartphones, smartwatches, and other types of personal devices we wear or carry with us. Machine learning techniques are an obvious choice to identifying useful patterns from this rich source of data. Here, we briefly describe the challenges that occur when processing this type of data and discuss what might be promising avenues for future work. This paper draws inspiration from a book we have recently written that will be published by Springer shortly [1].

1 Introduction

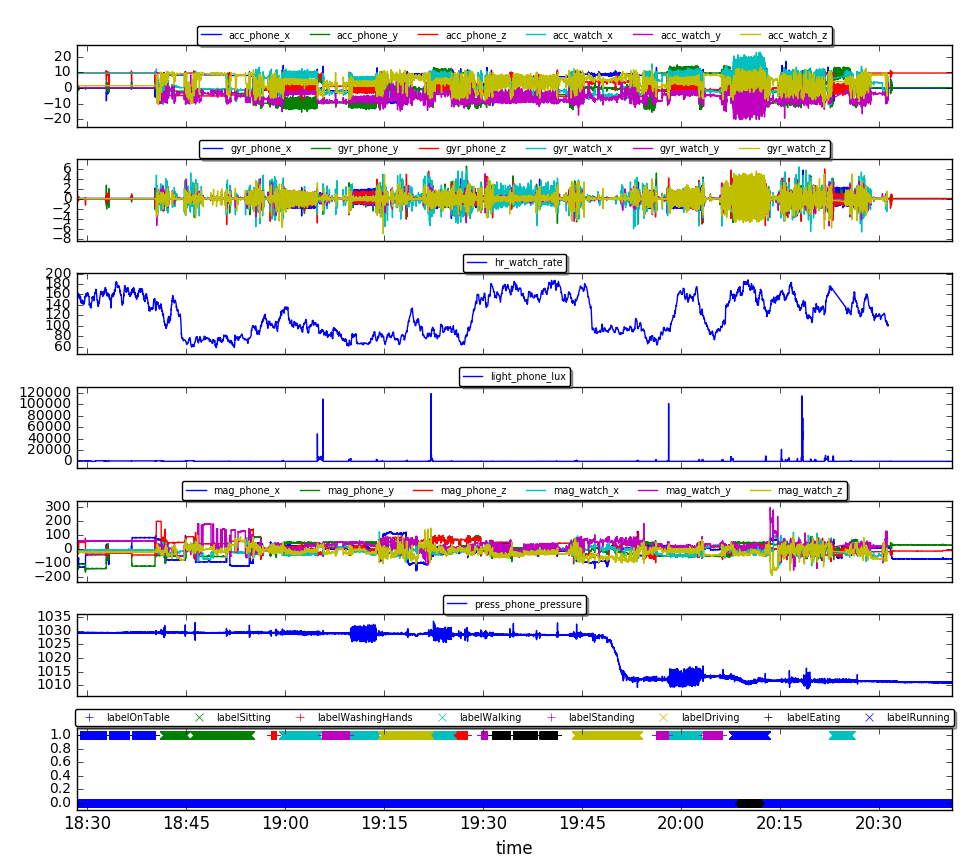

Have you ever thought about the huge amount of sensor data your smartphone generates about you? A typical smartphone includes sensors such as an accelerometer, a magnetometer, a gyroscope, a GPS tracker, and a light sensor. It registers the apps you are using, the phone calls you are making, and can be used to ask questions about your current mindset. And this is only the smartphone, we can name a multitude of other devices that collect data from sensors that surround us. An illustrative dataset is shown in Figure 1, taken from CrowdSignals.

Figure 1: Example quantified self dataset with a variety of sensors and labels on the activity provided by the user

With such sensor information we can gain insight into ourselves, or others can use this information. For the latter, think of a physician or an app that is trying to drive us towards healthier behavior. The quantified self is a term used to describe this phenomenon. The term was first coined by Gary Wolf and Kevin Kelly in Wired Magazine in 2007. Melanie Swam defines the quantified self as (cf. [2]): “The quantified self is any individual engaged in the self-tracking of any kind of biological, physical, behavioral, or environmental information. There is a proactive stance toward obtaining information and acting on it”. To act upon the collected information is far from trivial. The huge amount of data that originates from different sources and the large variation in reliability makes it very difficult to identify the crucial information. Various machine learning techniques can help to pre-process the data, generate models that infer the current state of a user (e.g. the type of activity), and predict future developments (how will the health state evolve). Ultimately, these models enable personalization of software applications by learning the way in which to optimally intervene on an individual user level.

In this brief article, we will explain how the quantified self data is different from other types of data when considering it from a machine learning perspective and discuss the state-of-the-art as well as prominent research challenges.

2 What makes the quantified self different?

While it may seem as just another typical machine learning problem, the quantified self data has a unique combination of characteristics:

1. sensory data is extremely noisy and many measurements are missing

2. measurements are performed at different sampling rates

3. the data has a highly temporal nature and various sources have to be synchronized

4. there are multiple datasets (one per user) which can vary in size considerably

In order to make this data accessible for machine learning approaches, these characteristics often require substantial efforts in data pre-processing. Furthermore, due to the multi-user setting learning has to account for heterogeneity and at the same time enable learning across users quickly.

3 What is the current state of the art?

Activity recognition is definitely the prime example when it comes to machine learning on quantified self data. Ample examples have been reported (e.g. [3], [4]), and lots of datasets have been made available to benchmark new approaches. Most emphasis has gone into the identification of useful features based on sequences of measurements, including features from the time-and frequency domain. In the time domain, features summarize the measured values over a certain historical window while in the frequency domain one tries to distill features on the assumption that certain sensory values contain some periodicity (e.g. walking results in periodic accelerometer measurements at a frequency around 1Hz). Reported accuracies are as high as 98% (cf. [4]).

Recently, also some work (see e.g. [5]) is seen in machine learning that tailor interventions using reinforcement learning: how can we support the user better towards his/her goals? Here, learning quickly from only a few data points is also key to avoid the disengagement of the user.

4 What are the research challenges?

There are still many opportunities for research, here are a few we consider to be of importance:

1. personalize applications: often, the current research focus is on prediction that are rarely used to inform decisions. In future, the focus should shift towards learning how to best support the user based on the data we collect and the feedback we obtain from the user. We should to this safely (i.e. not provide harmful suggestions) and efficiently (with limited experiences needed per user).

2. handle heterogeneity: we should be able to learn across different devices with different capabilities and sensors and also learn over different users. Additionally, if users carry multiple devices at the same time, they should act in a symphony, e.g. providing a message on a smart watch rather than on a phone when a person is in a meeting.

3. more effective predictive modeling: currently a lot of effort is spent on identifying useful features, effort should focus on deriving the most informative ones automatically (e.g. using deep learning). In addition, embedding of domain knowledge to avoid learning known information, exploiting the temporal patterns better, and also being able to explain the resulting models are key to bring the domain further.

4. do validation: while there are lots of apps that exploit the data from the quantified self, very few are evidence-based (cf. [6]). The effectiveness of apps should be studied better, possibly using more modern forms of evaluation such as A/B testing rather than the classical randomized controlled trials.

5 Conclusions

The area of Machine Learning for the Quantified Self is a challenging and exciting area within Artificial Intelligence. It comes with its own unique characteristics, resulting in lots of open issues that still need to be addressed. Of course, these developments will also come from closely related fields and cooperation will be mutually beneficial. An example is the area of predictive models using Electronic Medical Records, which exhibit strong similarities in terms of the type of data and the goals.

References

[1] Mark Hoogendoorn and Burkhardt Funk. Machine Learning for the Quantified Self – On the Art of Learning from Sensory Data. Springer, 2017.

[2] Melanie Swan. The Quantified Self: Fundamental Disruption in Big Data Science and Biological Discovery. Big Data, 1(2):85–99, jun 2013.

[3] Ling Bao and Stephen S. Intille. Activity Recognition from User-Annotated Acceleration Data. Pervasive Computing, pages 1 – 17, 2004.

[4] Oscar D. Lara and Miguel a. Labrador. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Communications Surveys & Tutorials, 15(3):1192–1209, 2013.

[5] Huitian Lei, Ambuj Tewari, and Susan Murphy. An actor–critic contextual bandit algorithm for personalized interventions using mobile devices. Advances in Neural Information Processing Systems, 27, 2014.

[6] Anouk Middelweerd, Julia S Mollee, C Natalie van der Wal, Johannes Brug, and Saskia J te Velde. Apps to promote physical activity among adults: a review and content analysis. International journal of behavioral nutrition and physical activity, 11(1):97, 2014.